Guess text encoding with scalar product

Playing with an 8-bit to multibyte text converter recently I came across with a rather interesting problem. The converter is intended to help with converting legacy text data encoded with older monobyte character encodings into UTF-8. Yes, the converting process itself is not a big deal. In fact it's a very basic and straightforward program a student would usually challenge him- or herself just after they accomplished the "Hello World!" one. However, the interesting part here is the fact that that "old encoding" can be one of a bunch of variants of slightly different ad hoc character sets. And to make things worse, the user doesn't usually know which code page exactly the original text was encoded with. And as always the user is reluctant to make any effort to find it out before using the tool. Where such ad hoc code pages came from and why they been used at all is a whole different story but it's not the point here. The point is how having only a piece of plain text to determine which code page it is encoded with. So...

Yes, I know that There Ain't No Such Thing As Plain Text. But I can assume that the entered text is written in a particular language. Let's assume that as an initial condition: the application is designed as a helper tool for those who manipulate text data in Sakha (ISO 639: sah). No problem if you don't know what Sakha is. As this article is in English let's use an analogy. Imagine that we have 5 different character encodings where most of the English alphabet is encoded the same way as ASCII except just 3 letters, let's say, A, B and C. And among these three our character sets sometimes repeat one another, intersect one with another and replace each other. And for the sake of example let's ignore upper and lower case. So we've got:

Table 1. Character sets.

A |

B |

C |

D |

E |

etc. |

|

|---|---|---|---|---|---|---|

ASCII |

65 |

66 |

67 |

68 |

69 |

... |

John's encoding |

67 |

65 |

66 |

68 |

69 |

... |

Mary's encoding |

67 |

66 |

65 |

68 |

69 |

... |

Jack's encoding |

65 |

66 |

176 |

68 |

69 |

... |

Bob's encoding |

64 |

176 |

255 |

68 |

69 |

... |

We don't know yet which encoding the text was originally encoded with. But we know that the text is in English and it was created with an application that used one of the above character sets. Also we can, or the computer that we're designing the algorithm for is able to, count the occurrences of each letter of the alphabet assuming each charset at a time and then compare the results. Looping and counting, that's what computers do best. But, compare to what? - That's a very good question. Well, in the best scientific traditions the answer should be: "Compare to the correct one indeed". But how useful is this answer. Let's see. How about if "the correct one" is a statistically average English text in a known encoding? Let's get a large enough article in English encoded in a known charset and dully count the occurrences of each letter in it. E.g. an article in UTF-8 at Wikipedia contains the following frequencies:

Table 2. Sampled frequencies in a real English text.

A |

B |

C |

D |

E |

etc. |

|

|---|---|---|---|---|---|---|

Occurrences |

5947 |

911 |

2352 |

2573 |

7954 |

... |

If curious the article at Wikipedia was "English language" but it doesn't matter. Of course, these numbers may vary from one article to another. The ratio however should stay about the same deviating slightly maybe depending on various things like style, topic discussed, vocabulary used etc. But deviation will be rather subtle I hope. At least subtle enough to have the algorithm work. That's the pivoting idea of this project. So let's count. Exempli gratia I'll give you a different text (The Declaration of Independence) but won't tell you its character encoding yet. And let's pay attention only at 3 letters - A, B, C in all character sets and ignore everything else. Firstly, because the codes for the remaining (26-3) = 23 letters are the same. And secondly, to simplify our example calculations.

Table 3. Frequencies as if encoded in different character sets.

A |

B |

C |

|

|---|---|---|---|

ASCII |

562(65) |

105(66) |

213(67) |

John's encoding |

213(67) |

562(65) |

105(66) |

Mary's encoding |

213(67) |

105(66) |

562(65) |

Jack's encoding |

562(65) |

105(66) |

0(176) |

Bob's encoding |

0(64) |

0(176) |

0(255) |

Even a brief glance at the Table 3 suggests us that the text is definitely not in Bob's encoding because none of his three codes occurs in the input data. Although in theory it may happen to a very short text but here we can see that other character sets show much better chances than Bob's. Similarly, Jack's encoding is also less likely candidate as it claims no "C" letter in the whole text (in The DeClaration of IndependenCe, duh!). OK, all this is a good guesswork. Now let's prove it scientifically.

The idea

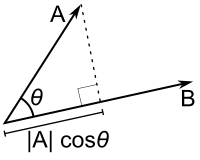

Let's consider the rows of the Table 3 as vectors in an Euclidean space. In that case the most correct vector, that we can bet on being the encoding we're looking for, will be the one which is closest to a known one, ideally collinear with it. And the known one would be the one that we sampled previously, see Table 2. ఇప్పుడు two vectors are considered collinear when the angle θ between them = 0.0, or when

cos( θ )=1.0

In other words, closer the vectors to each other less the angle θ , or cos( θ )→1.0. But how are we going to find the angle θ? Luckily for us, it happens that

cos( θ )=( x · y )/(‖ x ‖‖ y ‖)

where x and y are the two vectors. The dot product (scalar product) of two vectors is calculated by summing up together all products of the corresponding coordinates. And the length is basically the square root of the dot product of a vector with itself. Pretty simple, uh? Now, taking each row from Table 3 as the given vector we can calculate angle θ with the sample vector from Table 2. Actually, cos( θ ) will suffice.

The implementation

As soon as we've got the idea the implementation is very simple. Even a machine can do the job. And it's better to give it to the machine. Basically what we need is to have a machine to:

Take each row from Table 3

Multiply its column A by column A from Table 2, same for BB, CC

Add the three products alltogether - this is the Dot Product

Multiply each column by itself, sum them and get the sqrt from it - this is the Length

Get the Length of the sample vector the same way from Table 2

Divide the Dot Product by both the lengths - this is the Cosine

Here are the results:

Table 4. Cosine values.

cos( θ ) |

|

|---|---|

ASCII |

0.999405 |

John's encoding |

0.51397 |

Mary's encoding |

0.681061 |

Jack's encoding |

0.930862 |

Bob's encoding |

NaN |

Now we just have to choose which of them is the closest to 1.0. Since the maximum value a cosine can ever get is also 1.0, we may just get the absolute max from the list and that will be our best candidate. In the Table 4 it just happens to be ASCII with the value 0.999405. That is very close to 1.0 I'd say. Of course it was ASCII, the original code page. Where I'd find the text of The Declaration of Independence encoded in John's or Mary's or any other imaginary encodings? If I did however, I tell you, the algorithm would work and we'd see the true original code page as clearly as .999.

Here is one real implementation of this logic in JavaScript: PseudoYKT decoder at GitHub. It's a Google Gadget that being embedded in a Google Site's page can help to decode a legacy text from one of the ad hoc character sets automatically detecting the correct one. I doubt if anyone can use this gadget as it is in an application other than decoding text in Sakha from very custom charsets. But I'd definitely be happy if my tiny project inspired somebody to a project of their own. I don't mind if you use any piece of this code or the whole gadget for any purposes. Please let me know if you did. Thank you.

PS. Same or similar approach can be used whenever you want to recognize a piece of information by calculating its proximity to known samples. What is needed is to find a way to quantify its identifying features. It can be as simple as counting the occurrences or somewhat more sophisticated as finding Fourier series coefficients.